Which LLM to choose for GenAI in Finance

Story 3/3: A guide to help you decide the right LLM for your project.

This story is part of a three-week sequence. This time, “Which LLM to choose for GenAI in Finance,” Part 3/3:

Introduction of TinyLLM in Finance: The first story of the sequence introduces TinyLLMs and their importance in finance.

TinyLLM in Action: The second story of the sequence introduces a use case of implementing a TinyLLM on your local laptop or PC.

Which LLM to choose for GenAI in Finance: The last story of the sequence discusses several LLMs (Proprietary and Open-Source) and the decision-making process.

In the first story of the series, I introduced TinyLLMs in Finance, what they are, and their potential impact on Finance.

In the second story of the series, I showed how to implement a TinyLLM in Finance, with a detailed explanation and structured code to make it easy for you to reproduce the example.

In the last story of this series, I will focus mainly on the following three parts:

Which LLMs or TinyLLMs are the most effective for Finance and why?

Which LLMs or TinyLLMs should be used for which project and why?

When to choose between context-based learning (RAG) and fine-tuning.

I. Presentation of LLMs and TinyLLMs relevant for Finance

The following LLMs and TinyLLMs are presented:

Mistral

Zephyr

FinGPT

BloombergGPT

DeepSeek

Most models have sizes ranging from 1B to 70B (B=Billion) parameters. Depending on your infrastructure and the use cases to be implemented, you need smaller or larger LLMs. Here is a simple rule of thumb:

The more general knowledge your task requires and the more complex the task is, the more parameters (in billions) you need.

Example: If you want to deploy an LLM for coding and only need it to write simple functions, go for 1B. If you are tackling more complex coding tasks, such as understanding the interactions between SQL tables or code classes, go for a bigger model such as 7B or 30B.

If you have a large GPU cluster and budget is not a constraint, go for the largest version of a specialised LLM, as you will get the best performance with good response times.
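To connect model size to infrastructure, here is a minimal back-of-the-envelope sketch. It only estimates the memory needed to hold the model weights (parameters times bytes per parameter) and ignores activations, the KV cache, and framework overhead, so real requirements are higher. The function name `weight_memory_gb` and the precision table are illustrative assumptions, not part of any library.

```python
# Rough memory needed just to hold model weights.
# Assumption: activations, KV cache and framework overhead are ignored,
# so treat these numbers as a lower bound.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """Approximate weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return params_billions * 1e9 * BYTES_PER_PARAM[precision] / 1e9


for size in (1, 7, 30, 70):
    fp16 = weight_memory_gb(size, "fp16")
    int4 = weight_memory_gb(size, "int4")
    print(f"{size}B parameters: ~{fp16:.0f} GB in fp16, ~{int4:.1f} GB in int4")
```

Under these assumptions, a 7B model needs roughly 14 GB in 16-bit precision, which is why 7B models are a common choice for a single GPU, while a 70B model calls for a multi-GPU setup or aggressive quantization.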

Mistral

Mistral 7B is a 7-billion-parameter large language model from Mistral AI, noted for outperforming larger models like Llama 2 13B across various benchmarks. It is easy to fine-tune for different tasks, such as chat applications, and it performs well in areas like commonsense reasoning, world knowledge, and reading comprehension.

Zephyr

Like Mistral, Zephyr is a well-known name in the realm of LLMs. Zephyr-7B is a conversational model that Hugging Face fine-tuned from Mistral 7B. It can engage in open-ended, fluent, and informative conversations, adapting to the context of the conversation and responding to user queries thoughtfully. Zephyr's ability to understand and generate human-like language makes it a valuable tool for customer service, education, and healthcare applications.

FinGPT

FinGPT stands for Financial GPT. It aims to democratize access to financial data, serving as a significant driver of innovation in open finance. FinGPT offers various models and datasets, emphasizing applications like sentiment analysis, robo-advising, and stock trading. The organization has adopted an Enterprise version, requiring a monthly subscription for contributors who can join by contributing models or data.

BloombergGPT

BloombergGPT is a 50 billion parameter large language model specifically developed for the financial sector by Bloomberg. It was trained on diverse financial data, including a vast collection of financial documents curated by Bloomberg over 40 years. This model represents a significant step in AI for finance, with its training corpus consisting of over 700 billion tokens, combining Bloomberg's extensive financial data with public datasets.

BloombergGPT aims to improve various financial NLP tasks, including sentiment analysis, named entity recognition, news classification, and question answering, while also offering new opportunities for Bloomberg Terminal clients to manage the vast quantities of market data.

DeepSeek

DeepSeek LLM is an advanced language model developed with a focus on bilingual capabilities, covering both English and Chinese. It is available in two sizes: a 7 billion parameter model and a much larger 67 billion parameter version. Each of these comes in 'base', 'chat' or 'instruction' variations.

DeepSeek LLM stands out for its impressive performance in several key areas. For instance, the 67B Base model has demonstrated superior capabilities in reasoning, coding, math, and Chinese comprehension, outperforming similar-sized models like Llama2 70B Base. On the other hand, the 7B Chat model has shown exceptional proficiency in coding and math, evidenced by its performance on various benchmarks.

Let me know in the comments if you have any other interesting LLMs for Finance in mind.

II. A guide to help you decide which LLM or TinyLLM is best suited for your project.

In this article, I have already presented open-source models that can be hosted on your infrastructure, but let me make a brief aside before we dive into the different open-source LLMs.

When choosing between LLM and TinyLLM, one of the most important factors to consider is whether you are using cloud technology and are willing to share your information with an LLM provider such as OpenAI for GPT-3.5 or GPT-4. If the answer is yes, I would recommend GPT-3.5 as it is easy to implement, reduces time to market and offers good value for money.

If you want to learn more about the decision process between ChatGPT and open-source LLMs, I recommend reading the following article.

How to choose between ChatGPT and Open Source LLMs in Finance


To shed light on the practical application of an LLM in various scenarios, let me introduce three different use cases where LLMs can be effectively utilized. It's important to note that all of these use cases are deployed on-premise, without relying on any cloud infrastructure, and can run on your GPU cluster.

Coding in Finance with LLM

Large Language Models (LLMs) can revolutionize coding in finance by automating script generation for data analysis and reporting or for back-end web services. LLMs streamline the coding process, making it more efficient and less error-prone.

In any case, at this moment (25/12/2023), I'd choose DeepSeek models for their strength in coding and their understanding of both English and Chinese.

Internal Chatbot

LLMs power sophisticated internal chatbots in finance, providing immediate, intelligent responses to employee inquiries. These chatbots can handle various questions. They are programmed to understand specific financial jargon and workflows, making them invaluable for improving operational efficiency, reducing response times, and aiding in effective knowledge management within financial organizations.

When considering the infrastructure for your needs, two models stand out as good options: Mistral 7B and Zephyr-7B, each deployed as part of a RAG system. Both of these models excel in understanding human questions and generating well-structured responses. However, if you require a model with more common knowledge, you may want to consider a larger model.

Mail writer

LLMs serve as advanced email writers in the finance sector, drafting professional, contextually appropriate correspondence. They help compose emails for various financial scenarios, such as client communication, investment summaries, and regulatory compliance updates. This application significantly enhances productivity by saving time and reducing the likelihood of miscommunication in critical financial matters.

So if you are looking for a straightforward email writing tool, I suggest choosing a 7B-parameter model, which is sufficient for most purposes. I would also advise training the LLM on your own emails so that its suggestions stay consistent with your company's writing style.
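The recommendations in this section can be summarized as a small decision helper. This is only a sketch of the guidance given in this story, not a definitive rule set. The `Project` class, its fields, and the `recommend` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Project:
    """Hypothetical project description; field names are illustrative."""
    use_case: str                        # "coding", "chatbot", or "email"
    cloud_allowed: bool                  # may data be shared with an external provider?
    needs_broad_knowledge: bool = False  # does the chatbot need more common knowledge?


def recommend(project: Project) -> str:
    """Map a project to the model family suggested in this story."""
    if project.cloud_allowed:
        return "GPT-3.5 (hosted API)"
    if project.use_case == "coding":
        return "DeepSeek"
    if project.use_case == "chatbot":
        if project.needs_broad_knowledge:
            return "A larger model with RAG"
        return "Mistral 7B or Zephyr-7B with RAG"
    if project.use_case == "email":
        return "A 7B model trained on your emails"
    raise ValueError(f"Unknown use case: {project.use_case!r}")


print(recommend(Project(use_case="chatbot", cloud_allowed=False)))
```

The cloud question is checked first because, as discussed at the start of this section, it decides whether open-source models need to be considered at all.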

III. RAG or Fine-tuning an LLM/TinyLLM

Retrieval Augmented Generation (RAG)

The financial services industry stands at the crossroads of innovation and tradition, and Retrieval Augmented Generation, or RAG for short, is a significant development for it. RAG bridges the gap between the static knowledge base of conventional LLMs and the ever-evolving, dynamic nature of the financial sector.

At its core, RAG is a hybrid solution, combining the strengths of information retrieval systems with the generative power of LLMs. This fusion allows RAG to access external, continuously updated knowledge sources, so that its responses are both linguistically coherent and rooted in the latest, fact-based information. Unlike a traditional LLM, which requires extensive retraining to update its knowledge, a RAG system can be brought up to date simply by changing the documents it retrieves from, keeping pace with the rapid changes characteristic of the financial world.

The illustration below shows how up-to-date knowledge and the knowledge held in private documents are combined with the linguistic capabilities of LLMs.

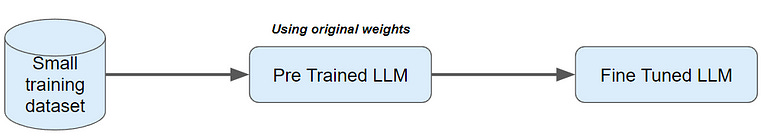
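The retrieve-then-generate idea can also be sketched in a few lines of Python. This is a deliberately simplified illustration: production RAG systems typically use an embedding model and a vector store, whereas this sketch ranks documents by plain word-overlap cosine similarity as a stand-in. The sample documents, the prompt wording, and the function names (`retrieve`, `build_prompt`) are all made up for the example, and the final call to an LLM is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved passages in front of the question for the LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Made-up internal documents, purely for illustration.
docs = [
    "The expense policy caps client dinners at 80 EUR per person.",
    "Quarterly risk reports are due on the fifth business day after quarter end.",
    "Travel bookings must be approved by the line manager.",
]
prompt = build_prompt("When are quarterly risk reports due?", docs, k=1)
print(prompt)  # this prompt would then be sent to the LLM of your choice
```

Updating the system's knowledge here means editing `docs`; the model itself is untouched, which is the main practical difference from fine-tuning.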

Fine-tuning an LLM

Fine-tuning a large language model for a specific task or domain means adapting the model’s learned representations to the target task by training it further on task-specific data. This training boosts the model’s performance on that task and gives it task-specific skills.

This involves using specific data, like “Question/Answer” or “User/Agent” interactions, to tailor the LLM to individual needs. An example is presented in the image below.

Fine-tuning doesn’t rival the original training. The LLM’s foundational training used vast amounts of data, and a fine-tuning dataset cannot compete with that.

The knowledge you infuse is task-specific — perhaps tailored for customer interactions — but transferring entirely new knowledge is challenging.
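To make the “Question/Answer” data format more concrete, here is a minimal sketch that turns a few pairs into JSON Lines records, a format many fine-tuning toolkits accept. The field names `prompt` and `completion`, the prompt template, and the sample pairs are assumptions for illustration; check the schema your own fine-tuning tool expects.

```python
import json

# Made-up question/answer pairs, purely for illustration.
examples = [
    {"question": "What is the cut-off time for same-day payments?",
     "answer": "Same-day payments must be submitted before 3 pm."},
    {"question": "Who approves new supplier accounts?",
     "answer": "New supplier accounts are approved by the finance controller."},
]


def to_record(example: dict) -> dict:
    """Format one pair as a prompt/completion record (assumed schema)."""
    return {
        "prompt": f"Question: {example['question']}\nAnswer:",
        "completion": " " + example["answer"],
    }


def to_jsonl(pairs: list[dict]) -> str:
    """Serialize all pairs as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_record(pair)) for pair in pairs)


print(to_jsonl(examples))
```

Each line is one training example, which makes it easy to append new interactions over time.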

For more detailed information about RAG, I recommend reading the following article.

Retrieval Augmented Generation (RAG) is Changing the Game in Finance


IV. Conclusion

Models like Mistral, Zephyr, FinGPT, BloombergGPT, and DeepSeek, each with their unique strengths and parameter sizes, offer tailored solutions for different financial tasks. The choice between these models depends on the complexity of the task, the required general knowledge, and the available infrastructure.

For instance, Mistral and Zephyr excel in conversational AI, while FinGPT and BloombergGPT specialize in financial data analysis. DeepSeek's bilingual capabilities further extend the range of applications, particularly in coding and math.

The decision-making process involves considering factors like the model's size, specific task requirements, and whether to use proprietary models like GPT-3.5 or open-source alternatives.

This series has provided insights into implementing TinyLLMs in finance, discussed the pros and cons of various models, and offered guidance on choosing the right LLM or TinyLLM for specific financial projects.

P.S.: The integration of techniques like Retrieval Augmented Generation (RAG) and model fine-tuning further enhances the adaptability and efficiency of these AI tools in addressing the dynamic needs of the financial sector.

As we move forward, the evolution and refinement of these models will undoubtedly continue to transform how financial data is analyzed and utilized, making AI an indispensable ally in finance.