The Impact of Interpretable AI and GenAI in Finance

Interpretable AI in Finance, and How ChatGPT or Open-Source LLMs like Llama2 Can Enable It

You might think that “Interpretable AI” is the same as “Explainable AI,” but it isn’t.

Interpretable AI inherently provides understandable insights into its decision-making process, while explainable AI involves additional techniques or tools to clarify how black-box models arrive at their conclusions.

For those who recently started following me and missed one of my first articles about “Explainable AI in Finance,” I recommend reading it.

Explainable AI in Finance

Last week, I published an article about Generative AI in Finance, the first of three topics. In that article, I talked about how Generative AI is making waves in the financial sector, with its potential to create new content and automate complex tasks.

Among the various facets of AI, interpretable AI stands out for its potential to transform how financial institutions operate, make decisions, and interact with customers. Now, with the boost we’ve seen in 2023 from GenAI, LLMs, ChatGPT, Llama2, and others, this is far more reachable than I would ever have imagined.

Let me tell you something: My name is Christophe, and I work as a manager of the data science and research team in a bank. I built this team from scratch seven years ago. During this time, we have witnessed various stages of success and failure. There were moments when our work was not trusted, understood, or believed in. In most cases, the reason came down to one of two unanswered questions:

Can the cause and effect be determined? → Interpretability

Can the significance of a feature in influencing the overall model performance be determined? → Explainability

And let me share another piece of wisdom: failure has a lot to teach, sometimes more than success. Success brings prominence, while failure often turns into something painful. Yet don’t miss the chance to learn from failure and turn it into success next time. Never miss a chance to learn.

I am telling you this because, in the context of AI, a model often goes to production without giving its users any way to interpret it later on. Success can quickly turn into failure.

In 2022/2023, the emergence of Large Language Models (LLMs) such as ChatGPT marked a significant milestone in the AI journey. These models offer new possibilities but also raise essential considerations regarding interpretable AI. I will come back to this potential solution later, but first, let’s get down to some theory.

1. Let’s break the Knowledge Barrier

I. What is Interpretability?

Interpretability refers to understanding and explaining how financial machine learning models make predictions or decisions. This concept is crucial in a field where decisions based on these models can have significant monetary and strategic impacts.

It's about making the workings of complex algorithms transparent and understandable. This means tracing and communicating the reasoning behind a model's decisions or predictions. The goal is to balance data processing and human-understandable explanations, ensuring stakeholders can trust and effectively use the insights these models provide.

Accomplishing this in finance is pivotal because it makes high-performing machine learning models less intimidating and more readily adopted by their users, the business experts.

Achieving interpretability is crucial in finance for several reasons.

Ensuring transparency: Financial institutions are under the watch of regulators and must be able to justify their decisions. Whenever a model recommends a particular stock or decides whether a person should receive a loan, the “Why” and the “How” must be available in case of an investigation.

Ensuring accountability: In high-risk scenarios, such as evaluating loan defaults, the cost of inaccurate prediction can be enormous. Interpretable models allow for a more straightforward assessment of responsibility and errors.

Enabling innovation and competitiveness: Just as Billy Beane's approach in 'Moneyball' challenged traditional methods in baseball scouting, finance professionals who leverage interpretable models can disrupt conventional practices, offering novel insights and strategies that outperform traditional models.

Always enabling interpretability may sound good, but there are downsides to consider. There are always trade-offs and limitations, even with the best intentions.

The catch in this case is that enabling interpretability may limit the use of specific models, such as neural networks, which are not currently easily interpretable. Here is a list of interpretable and less interpretable models.

Interpretable Models

Linear Regression: This model is straightforward, explaining relationships between variables with a linear approach. Its simplicity allows for a straightforward interpretation of how input variables affect the output.

Logistic Regression: Similar to linear regression but used for classification problems. It provides clear insights into how each feature influences the probability of a specific outcome.

Decision Trees: These models break down data by making decisions based on feature values. They are highly interpretable as they mimic human decision-making processes.

Rule-Based Systems: These involve sets of if-then rules. Their transparent logic flow makes them easily interpretable.

Naive Bayes Classifiers: Based on Bayes' Theorem, they are simple and provide a clear understanding of how probabilities are adjusted based on feature values.
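To make "straightforward interpretation" concrete, here is a minimal sketch of how a logistic regression can be read directly from its coefficients. The feature names, the coefficients, and the intercept are hypothetical values chosen for illustration; a real model would learn them from data.

```python
import math

# Hypothetical coefficients for illustration only; a real model learns these from data.
INTERCEPT = -3.0
COEFFICIENTS = {
    "debt_to_income": 5.0,          # ratio between 0 and 1
    "recent_bankruptcy": 1.2,       # 1 if bankruptcy in the past two years, else 0
    "credit_score_hundreds": -0.5,  # credit score divided by 100
}


def default_probability(applicant):
    """Logistic regression: the sigmoid of a weighted sum of the features."""
    log_odds = INTERCEPT + sum(
        COEFFICIENTS[name] * applicant[name] for name in COEFFICIENTS
    )
    return 1.0 / (1.0 + math.exp(-log_odds))


def odds_ratios():
    """exp(coefficient) is the factor applied to the odds per one-unit increase."""
    return {name: math.exp(coef) for name, coef in COEFFICIENTS.items()}


applicant = {"debt_to_income": 0.45, "recent_bankruptcy": 1, "credit_score_hundreds": 5.8}
print(f"Estimated default probability: {default_probability(applicant):.2f}")
for name, ratio in odds_ratios().items():
    print(f"{name}: odds multiplied by {ratio:.2f} per one-unit increase")
```

A ratio above 1 means the feature raises the odds of default, and a ratio below 1 means it lowers them. This direct reading is what the less interpretable models lack.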

Less Interpretable Models

Neural Networks/Deep Learning: These models involve complex structures of interconnected nodes (neurons). Their "black-box" nature makes it challenging to discern how specific inputs affect outputs.

Random Forests: Although based on decision trees, the ensemble nature of random forests (many trees voting on the outcome) complicates the interpretability.

Gradient Boosting Machines (GBM): These are also based on decision trees but are more complex due to sequential model building and corrections, making them less interpretable.

Support Vector Machines (SVMs): Especially with non-linear kernels, SVMs can become complex and challenging to interpret, as the transformations in feature space are not straightforward.

Recurrent Neural Networks (RNNs): Used in sequential data processing (like language translation), their internal state and feedback loops make them less interpretable.

II. What is Explainability?

In contrast to interpretability, explainability in machine learning refers to the degree to which the internal mechanics of a model can be understood and articulated. This concept is particularly relevant in models like neural networks, which often operate as "black boxes".

For instance, in predicting life expectancy for life insurance purposes, a highly explainable model would allow us to understand the relative importance of various inputs such as age, BMI, smoking history, and career category. In an explainable model, each factor's contribution to the prediction (e.g., 40% career, 35% smoking history) would be clear.
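As a small illustration of where such percentages can come from, the sketch below normalizes raw attribution scores into shares of the total. The raw scores are hypothetical and were picked only so that career and smoking history land on the 40% and 35% from the example; the split between age and BMI is an assumption.

```python
def relative_importance(attributions):
    """Convert raw attribution scores into percentage shares of the total absolute score."""
    total = sum(abs(value) for value in attributions.values())
    if total == 0:
        raise ValueError("all attributions are zero")
    return {name: 100 * abs(value) / total for name, value in attributions.items()}


# Hypothetical raw scores (for example, from a feature-attribution method).
raw_scores = {"career": 8, "smoking_history": 7, "age": 3, "bmi": 2}
shares = relative_importance(raw_scores)
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {share:.0f}%")
```

Absolute values are used so that features pushing the prediction down still count toward importance; the sign would have to be reported separately.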

While not always necessary for a model's function, explainability is crucial for validating and trusting its decisions, especially in high-stakes applications. It involves deciphering how significant each node is in the model's decision-making process, enhancing transparency and accountability in its predictions.

For more information, check out my article about Explainable AI in Finance.

III. What is GenAI or LLM?

GenAI (Generative AI): Unlike traditional AI, generative AI (GenAI) is a type of AI technology capable of generating new information, such as text, images, and sound, based on vast amounts of data used during learning. This ability allows GenAI to create content often indistinguishable from what humans produce. It's beneficial in fields like marketing, where it can generate personalized advertisements, or in entertainment, where it can create unique music or artwork. Additionally, GenAI can be used in educational settings to produce customized learning materials or in the medical field to simulate patient scenarios for training purposes. With advancements in machine learning and data processing, GenAI continues to evolve, becoming more sophisticated and finding new applications in various industries. With GenAI, we can automatically respond to certain emails, open specific tickets, summarize Excel files and reports, analyze calls for tenders, etc.

LLM (Large Language Model): An LLM is a type of GenAI specifically designed to understand human language and generate text. Think of it as a knowledgeable friend who has read a vast library and can discuss almost any subject, write articles, and answer questions. These models are trained on diverse texts, enabling them to grasp language structure, context, tone, and even cultural nuances. This makes LLMs particularly effective in applications like customer service, where they can provide quick, contextually relevant responses, or in content creation, where they can generate articles, stories, or even poetry. The versatility of LLMs extends to language translation, making them invaluable tools in global communication. As technology advances, LLMs are expected to become more nuanced in understanding and replicating human emotions and subtleties in conversation, leading to more natural and engaging interactions in both digital and real-world applications.

For more information, check out my article “Generative AI in Finance.”

2. “Solving” the black box problem with GenAI

I want to clarify that what I am about to suggest for mitigating the black-box problem is only an idea at this point. It hasn't been tested or implemented yet, but I believe we may soon see LLMs used for this purpose, and if this article does no more than point in a useful direction, I am more than OK with that.

So, let’s get down to the exciting part.

The solution's core lies in using Large Language Models (LLMs) to generate natural language explanations for decisions made by complex machine learning models in financial contexts, particularly for loan approval processes. This approach meets the critical need for transparency and interpretability in automated decision-making systems.

I. Problem statement:

When working in data science, we often build complex models to address questions such as predicting loan defaults or determining whether someone is eligible for a loan. These models are available and proven effective, but it is just as important to understand and interpret why a particular decision was made. In finance, the use of these powerful models is limited because they are not always easily interpretable: they are so-called black-box models.

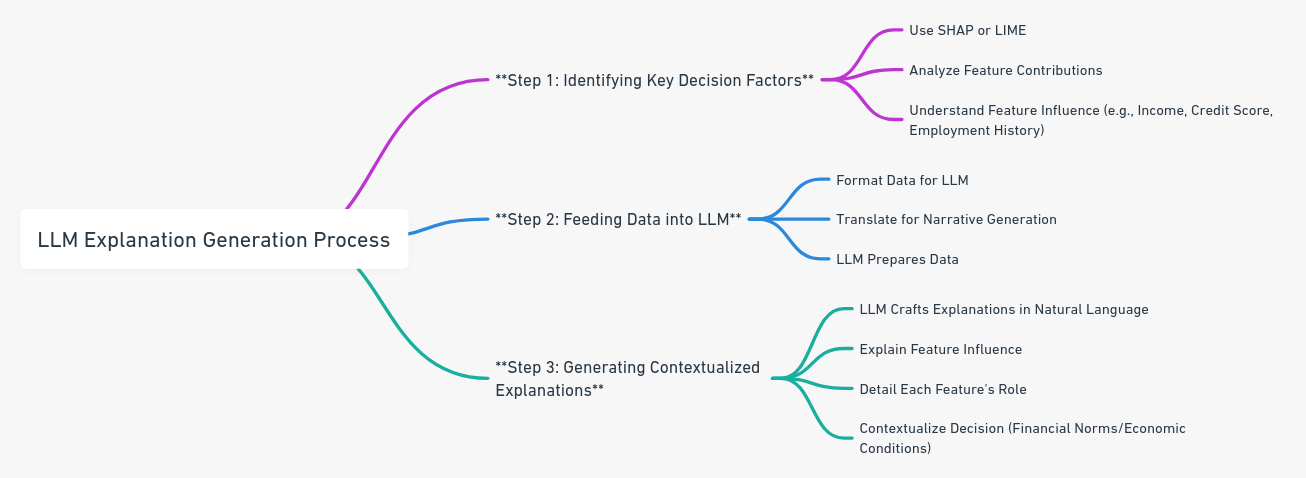

II. Proposed solution: A Three-Step solution based on SHAP/LIME and LLM.

Step 1: Identifying Key Decision Factors

Before an LLM can generate explanations, the factors influencing the decision must be identified. This is achieved using techniques like SHAP or LIME, which provide insights into how different features (e.g., income, credit score, employment history) influence the model’s prediction. These techniques break down the prediction to show the contribution of each feature, effectively 'opening up' the black box.
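To show what "breaking down the prediction" means, here is a minimal sketch that computes exact Shapley values, the quantity SHAP approximates, for a single applicant. It deliberately does not call the SHAP or LIME libraries: the scoring function, the baseline applicant, and all feature values are hypothetical, and enumerating every feature subset is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def toy_risk_score(x):
    """Hypothetical stand-in for a black-box model: a higher score means higher risk."""
    score = 2.0 * x["debt_to_income"] + 0.5 * x["recent_bankruptcy"]
    score -= 0.002 * (x["credit_score"] - 600)
    if x["recent_bankruptcy"] and x["debt_to_income"] > 0.35:
        score += 0.2  # interaction term that a simple linear reading would miss
    return score


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        x = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return predict(x)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {name}) - value(members))
        phi[name] = total
    return phi


applicant = {"debt_to_income": 0.45, "recent_bankruptcy": 1, "credit_score": 580}
baseline = {"debt_to_income": 0.30, "recent_bankruptcy": 0, "credit_score": 680}
contributions = shapley_values(toy_risk_score, applicant, baseline)
for name, impact in contributions.items():
    print(f"{name}: {impact:+.3f}")
```

The contributions add up to the difference between the applicant's score and the baseline score, which is what makes them usable as a per-feature account of one decision.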

Step 2: Feeding Data into LLM

The data about feature contributions is then formatted and fed into an LLM. This data includes the features, impact values, and the final decision (e.g., loan approved or denied). The challenge here is to translate numerical and categorical data into a format conducive to narrative generation by the LLM.
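One possible way to do this translation is sketched below: rank the contributions by magnitude, keep the top few, and render them as short text lines. The function name, the contribution values, and the wording of each line are all assumptions made for illustration.

```python
def format_contributions_for_llm(decision, contributions, top_k=3):
    """Turn numeric feature contributions into a short, ranked text block for a prompt."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    lines = [f"Decision: {decision}", "Most important features (largest impact first):"]
    for name, impact in ranked[:top_k]:
        direction = "pushed toward denial" if impact > 0 else "pushed toward approval"
        readable = name.replace("_", " ")
        lines.append(f"- {readable}: impact {impact:+.2f} ({direction})")
    return "\n".join(lines)


# Hypothetical contribution values; positive means the feature increased predicted risk.
example_contributions = {
    "debt_to_income_ratio": 0.40,
    "recent_bankruptcy": 0.60,
    "credit_score": 0.20,
    "years_at_current_job": -0.05,
}
prompt_block = format_contributions_for_llm("loan denied", example_contributions)
print(prompt_block)
```

Keeping only the top few features is a design choice: it shortens the prompt and keeps the narrative focused, at the cost of hiding minor factors.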

Step 3: Generating Contextualized Explanations

The LLM uses this data to craft explanations in natural language. These explanations detail how each significant feature influenced the decision. For example, the LLM might explain that a high debt-to-income ratio was a key factor in denying a loan, suggesting potential difficulty in managing additional debt. The explanation can also contextualize the decision within broader financial norms or economic conditions.

Structured Summarization: The LLM converts the feature impact data into a structured summary that forms the basis of the narrative.

Explanation Templates: Predefined templates guide the generation of explanations, ensuring they are consistent and comprehensive.

Customization: The narrative can be tailored to the knowledge level of the intended audience, from laypersons to financial experts.
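The template and customization ideas above can be sketched as follows. This is an untested design rather than a reference implementation: the template text, the audience labels, and the `llm` callable are hypothetical, and `echo_llm` is only a stand-in so the example runs without any model or API.

```python
AUDIENCE_INSTRUCTIONS = {
    "layperson": "Use plain language, avoid jargon, and suggest one practical next step.",
    "expert": "Use precise financial terminology and reference each threshold explicitly.",
}

EXPLANATION_TEMPLATE = (
    "Write a detailed analysis explaining the decision: {decision}.\n"
    "Most important features:\n{factors}\n"
    "Audience guidance: {guidance}"
)


def build_prompt(decision, factors, audience="layperson"):
    """Fill the predefined template so every explanation request has the same shape."""
    if audience not in AUDIENCE_INSTRUCTIONS:
        raise ValueError(f"unknown audience: {audience}")
    factor_lines = "\n".join(f"- {factor}" for factor in factors)
    return EXPLANATION_TEMPLATE.format(
        decision=decision,
        factors=factor_lines,
        guidance=AUDIENCE_INSTRUCTIONS[audience],
    )


def explain(decision, factors, audience, llm):
    """`llm` is any callable that maps a prompt string to generated text (assumed interface)."""
    return llm(build_prompt(decision, factors, audience))


def echo_llm(prompt):
    # Stand-in for a real model call so the sketch runs offline.
    return f"[model output for a prompt of {len(prompt)} characters]"


factors = [
    "Debt-to-income ratio of 45% (threshold 35%)",
    "Recent bankruptcy within the past two years",
    "Credit score of 580 (minimum 600)",
]
print(build_prompt("loan application denied", factors, audience="expert"))
print(explain("loan application denied", factors, "layperson", echo_llm))
```

Passing the model in as a callable keeps the template logic independent of whether the institution uses a hosted model such as GPT-4 or a self-hosted one such as Llama2.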

III. Example of a Detailed Explanation

Consider a scenario where a loan application is declined. The LLM, provided with data showing a high debt-to-income ratio, recent bankruptcy, and a low credit score, generates an explanation:

"The loan application was declined primarily due to a high debt-to-income ratio of 45%. This ratio, significantly above our threshold of 35%, suggests a potential risk in the applicant's ability to manage additional loan repayments. Furthermore, a recent bankruptcy filed within the past two years raises concerns about the applicant’s financial stability and history of meeting financial obligations. Lastly, the credit score of 580 falls below our minimum requirement of 600, reflecting a history of inconsistent financial management. These factors indicate a higher risk profile than our institution typically approves for loans." — Generated by ChatGPT (GPT-4)

The prompt used was the following:

Write a detailed analysis explaining the denial of a loan application. Most important features:

High debt-to-income ratio of 45% compared (Threshold 35%),

Recent bankruptcy within the past two years

Credit score of 580 (Minimum 600).

Highlight these factors as indicators of a high-risk profile and justify why these financial conditions lead to the decision of not approving the loan

IV. Advantages of LLM-Generated Explanations

Transparency: By providing understandable explanations, these narratives demystify the decision-making process.

Trust: Clear explanations help build user trust in automated systems.

Compliance: Detailed narratives can assist in adhering to regulatory standards demanding transparency in automated decision-making.

Customer Relations: Offering clear reasons for a decision, especially in cases of loan denial, can improve customer relations and provide applicants with actionable feedback.

V. Conclusion on “Solving” the black box problem with GenAI

Integrating Large Language Models (LLMs) in financial decision-making, such as loan approvals, represents a significant stride toward enhancing transparency and accountability in AI-driven systems. However, this idea comes with challenges as well as immense potential for future development.

Ensuring that LLMs accurately interpret and communicate the significance of data is a foremost priority. There's a risk that misinterpretations could lead to misleading or incorrect explanations, undermining the system's credibility. Additionally, it's crucial to regularly audit both the LLM and underlying predictive models for biases. This step is essential to ensure that decisions are ethical and non-discriminatory, maintaining the integrity and fairness of automated decision-making.
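One crude guard against misleading explanations is to check that every number the LLM cites was actually part of its input. The sketch below is an assumption about how such a check could look, not a complete faithfulness test: it only compares numeric values and would not catch a wrong claim expressed in words.

```python
import re


def unsupported_numbers(explanation, facts):
    """Return numbers cited in the explanation that do not appear among the input facts."""
    allowed = {float(value) for value in facts.values()}
    cited = {float(match) for match in re.findall(r"\d+(?:\.\d+)?", explanation)}
    return sorted(cited - allowed)


# The fact names are hypothetical; the values come from the loan example in this article.
facts = {
    "debt_to_income_pct": 45,
    "dti_threshold_pct": 35,
    "credit_score": 580,
    "min_credit_score": 600,
}
faithful = (
    "The application was declined due to a debt-to-income ratio of 45%, above our "
    "threshold of 35%, and a credit score of 580, below our minimum of 600."
)
altered = faithful.replace("580", "620")

print(unsupported_numbers(faithful, facts))
print(unsupported_numbers(altered, facts))
```

An empty list means every cited figure was supplied to the model; anything else is a signal to hold the explanation back for human review.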

Another critical aspect is the system’s scalability and ability to adapt to evolving financial trends, regulatory changes, and emerging data patterns. This adaptability is essential to maintaining its relevance and accuracy over time. Moreover, striking a balance between technical accuracy and user-friendly language is necessary. Explanations should be detailed enough to inform yet simple enough for comprehension by non-experts.

Beyond justifying decisions, these explanations also serve an educational purpose. They can guide customers in understanding the factors affecting their financial health and decision-making processes. In an era of increasing scrutiny over AI in decision-making, these systems can exemplify a commitment to ethical standards and regulatory compliance, especially in sensitive sectors like finance.

Incorporating feedback mechanisms allows for the continuous refinement of the accuracy and clarity of the explanations provided, enhancing user trust and system effectiveness. The potential of this approach extends beyond finance, with applicability in areas like human resources and legal decision-making, where transparency is equally critical.

Looking ahead, tailoring explanations to the recipient's background knowledge could enhance understanding and relevance, making the narratives more impactful. Introducing interactive elements would allow users to engage more deeply with the explanations, seeking clarifications and delving into specific decision aspects. Moreover, reflecting the dynamic nature of the financial world, the system could offer updated explanations as conditions or individual circumstances change, ensuring that the information remains current and relevant.

While there are challenges like ensuring accuracy, managing biases, maintaining scalability, and ensuring user-friendliness, integrating LLMs in financial decision-making is a promising step forward. This approach holds the potential to enhance transparency and accountability in AI systems and expand into various domains, continuously evolving with advancements in AI and user feedback. It could significantly reshape how decisions are made and understood in critical sectors, paving the way for a more informed, ethical, and transparent future in automated decision-making.

Conclusion

Historically, AI in finance was primarily focused on data analysis and predictive modeling, aiding in tasks like market analysis, risk assessment, and fraud detection. However, introducing LLMs has expanded AI’s horizons, bringing sophisticated language understanding and generation capabilities into the mix. This shift has enhanced existing applications and paved the way for innovative solutions in customer service, financial reporting, and more personalized financial advisory services.

Interpretability in AI refers to understanding and trusting the models' decisions and predictions. The need for interpretable AI becomes crucial in finance, where decisions can have significant economic impacts. Traditionally, many AI models in finance have been critiqued as 'black boxes' due to their lack of transparency. This opacity can be a significant hurdle in risk management, regulatory compliance, and customer trust. Interpretable AI models, by contrast, allow users to understand how decisions are made, ensuring that these decisions are justifiable, fair, and compliant with regulatory standards.

Open-source LLMs like Llama2 and proprietary ones such as GPT-4 are at the forefront of this interpretability drive. These models combine advanced language processing capabilities with the ability to present results in a transparent, understandable way. Open-source models in particular allow for greater scrutiny and customization, enabling financial institutions to tailor them to their specific compliance and operational needs. AI-powered models such as ChatGPT have been effectively utilized in a number of financial institutions, showcasing the potential of AI in improving customer interactions and operational efficiency. However, we can take this a step further by ensuring the interpretability of other powerful models, different from ChatGPT, making them more widely available for critical financial processes.

The finance sector is heavily regulated, with laws and regulations like the GDPR and the EU AI Act imposing strict requirements for transparency and ethical standards. Interpretable approaches enable the development of AI systems where decisions can be audited and explained, which is crucial for maintaining accountability and ethical standards.

As the financial sector continues to embrace these technologies, the focus will remain on leveraging AI's potential responsibly and ethically, ensuring that it serves the industry's and its customers' best interests. The future of finance with AI is bright, filled with possibilities for more inclusive, efficient, and transparent financial services.